# Hello!

I’m Michael:

- 5th-year Computer Science PhD student advised by Chris Ré.

- Labmate at HazyResearch, Stanford AI Lab, Stanford Machine Learning Group.

I’m currently excited about making AI more robust, reliable, and capable. How do we build on our progress in foundation models + model efficiency to unlock new kinds of capabilities (agentic stuff), while making them robust + reliable enough to be useful?

As a bonus, can we automate this learning via self-improving systems (learning from past mistakes, learning to improve their own efficiency)?

Before the PhD, I received my A.B. in Statistics and Computer Science from Harvard in 2020. I’m grateful to have worked with Serena Yeung, Susan Murphy, and Alex D’Amour on computer vision and reinforcement learning in healthcare.

# Research

The Hedgehog & the Porcupine: Expressive Linear Attentions with Softmax Mimicry

Michael Zhang, Kush Bhatia, Hermann Kumbong, and Christopher Ré

ICLR 2024

[NeurIPS ENLSP 2023 Oral]

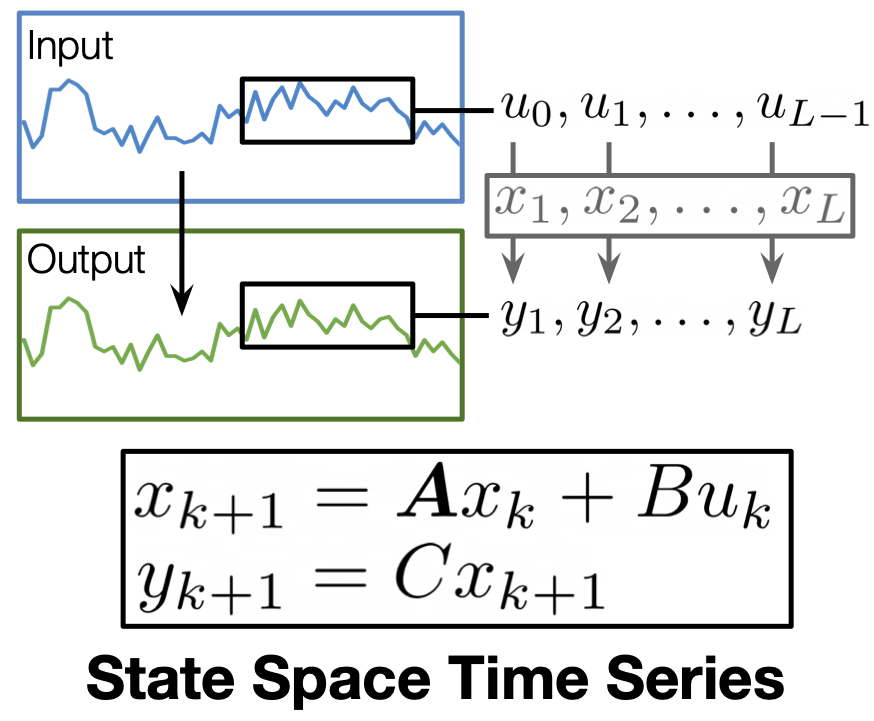

Effectively Modeling Time Series with Simple Discrete State Spaces

Michael Zhang*, Khaled Saab*, Michael Poli, Tri Dao, Karan Goel, and Christopher Ré

ICLR 2023

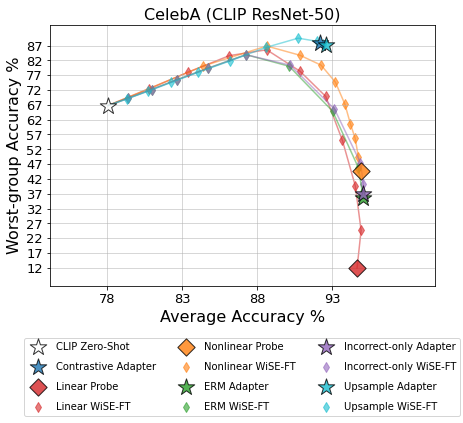

Contrastive Adapters for Foundation Model Group Robustness

Michael Zhang and Christopher Ré

NeurIPS 2022

Correct-N-Contrast: a Contrastive Approach for Improving Robustness to Spurious Correlations

Michael Zhang, Nimit S. Sohoni, Hongyang R. Zhang, Chelsea Finn, and Christopher Ré

ICML 2022

[Long Talk]

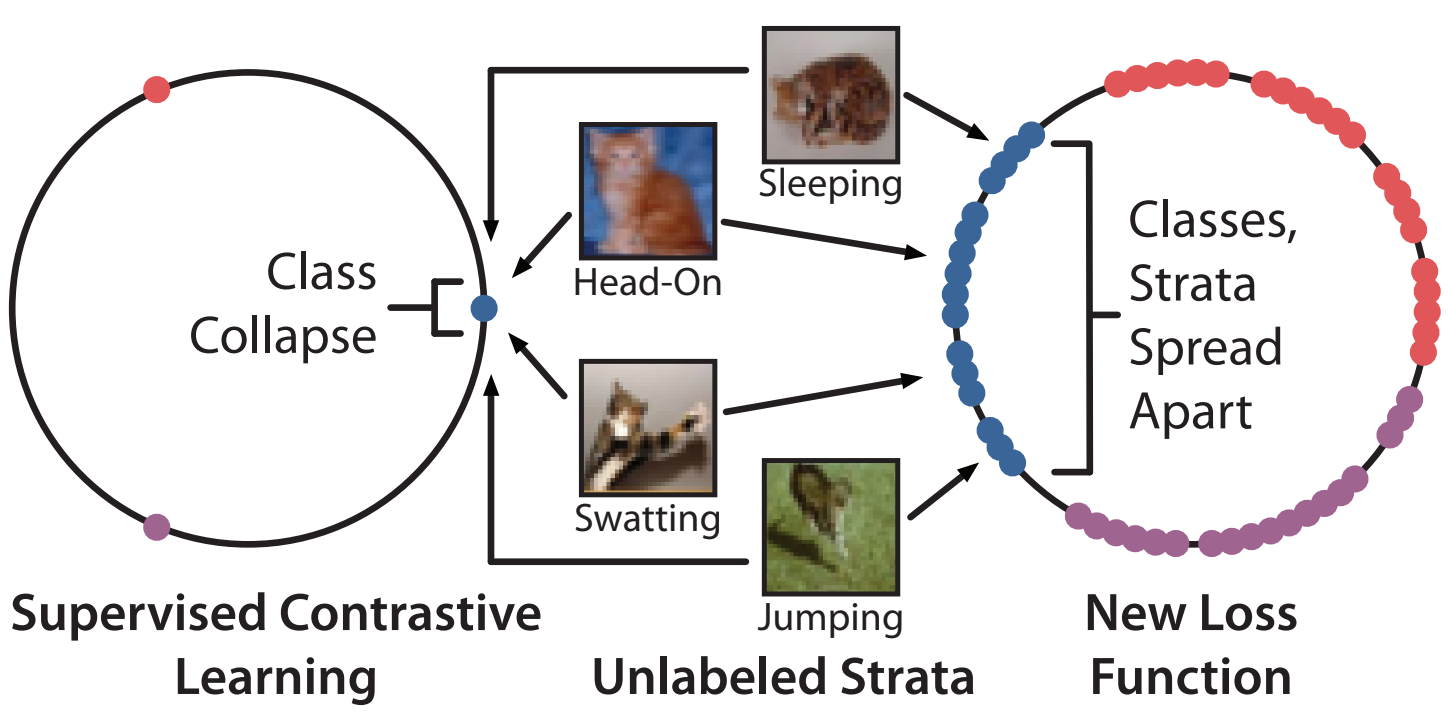

Perfectly Balanced: Improving Transfer and Robustness of Supervised Contrastive Learning

Mayee F. Chen*, Daniel Y. Fu*, Avanika Narayan, Michael Zhang, Zhao Song, Kayvon Fatahalian, and Christopher Ré

ICML 2022

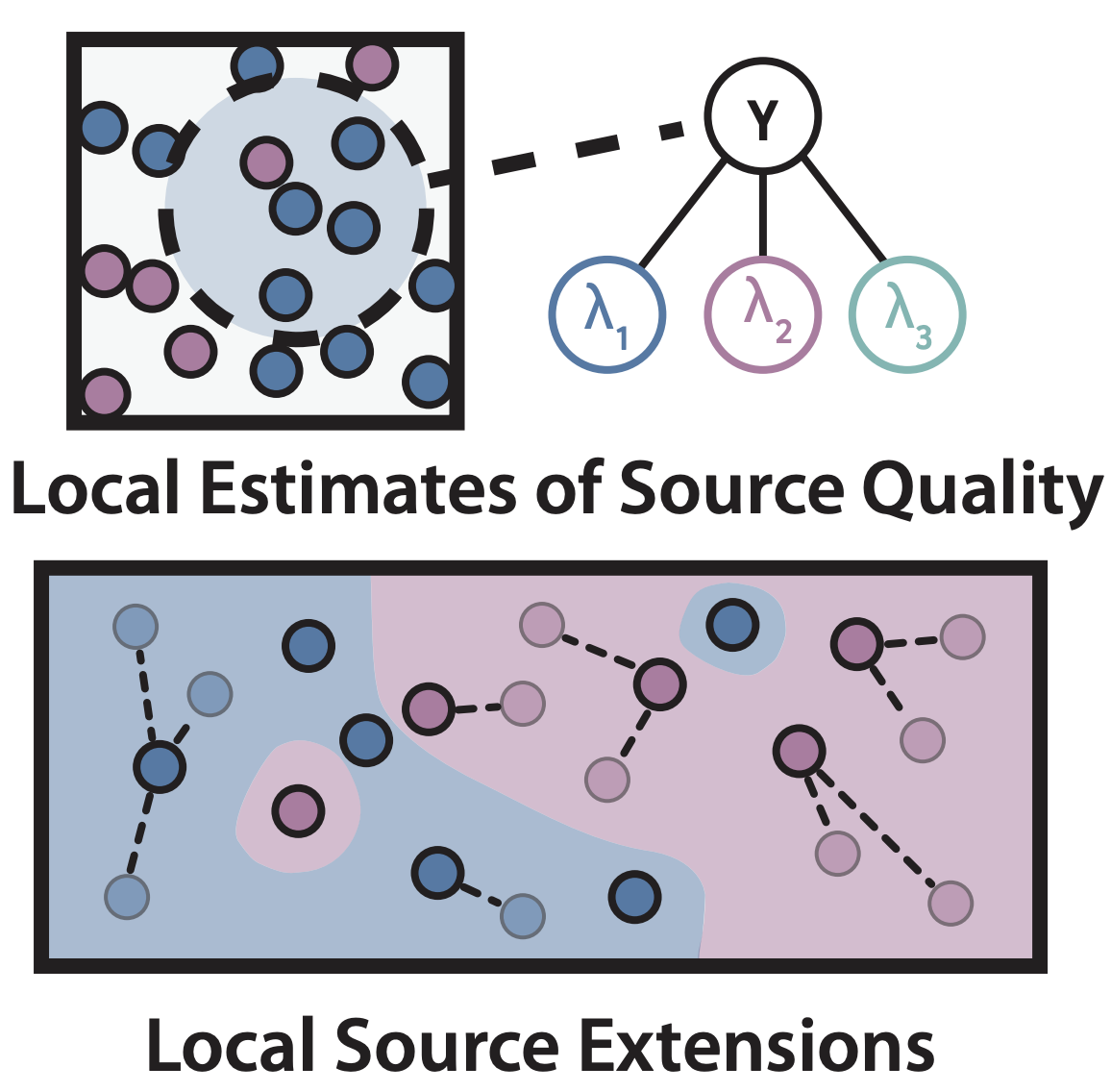

Shoring Up the Foundations: Fusing Model Embeddings and Weak Supervision

Mayee F. Chen*, Daniel Y. Fu*, Dyah Adila, Michael Zhang, Frederic Sala, Kayvon Fatahalian, and Christopher Ré

UAI 2022

[Oral]

Personalized Federated Learning with First Order Model Optimization

Michael Zhang, Karan Sapra, Sanja Fidler, Serena Yeung, and José M. Álvarez

ICLR 2021